Retrieval Augmented Generation (RAG)

Overview

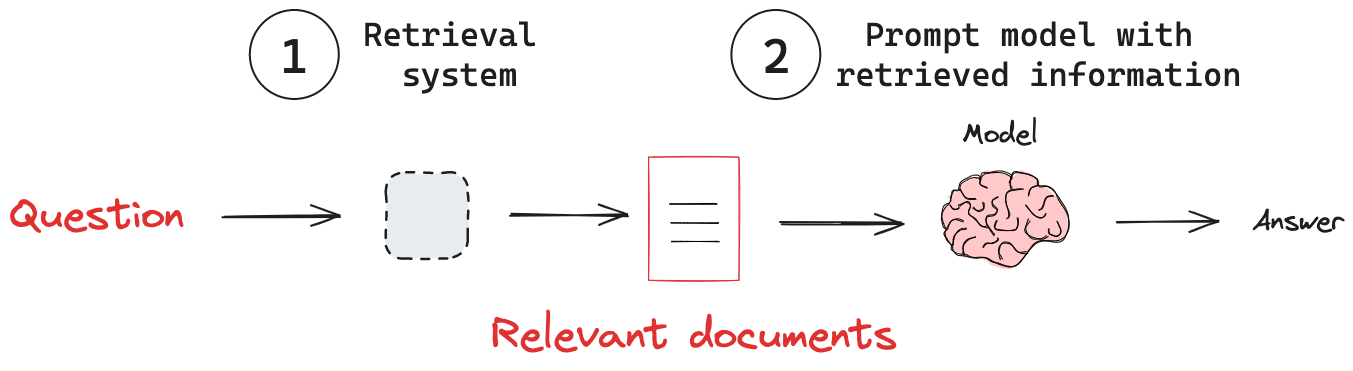

Retrieval Augmented Generation (RAG) is a technique that enhances language models by integrating them with external knowledge sources. RAG addresses a significant limitation of models: they depend on static training datasets, which can leave their knowledge outdated or incomplete. When presented with a query, a RAG system first searches a knowledge base for relevant data. The retrieved information is then incorporated into the model's prompt, and the model uses this context to generate a response to the query. By combining the broad knowledge of language models with dynamic, targeted information retrieval, RAG makes AI systems more capable and reliable.

Key Concepts

- Retrieval System: Extract relevant information from a knowledge base.

- Adding External Knowledge: Provide the retrieved information to a model.

Retrieval System

Models have internal knowledge that is often static or infrequently updated due to the high cost of training. This limits their ability to answer questions about current events or provide specific domain knowledge. To address this, various knowledge injection techniques like fine-tuning or continued pre-training are used. Both are costly and often poorly suited for factual retrieval. Using a retrieval system offers several advantages:

- Up-to-date Information: RAG can access and utilize the latest data, keeping responses current.

- Domain-Specific Expertise: With a domain-specific knowledge base, RAG can answer questions that require specialized knowledge missing from the model's training data.

- Reduced Hallucination: Grounding responses in retrieved facts helps minimize false or invented information.

- Cost-Effective Knowledge Integration: RAG offers a more efficient alternative to expensive model fine-tuning.

See our conceptual guide on retrieval.
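To make the idea of a retrieval system concrete, here is a minimal sketch of an in-memory retriever that ranks documents by keyword overlap with the query. It is a toy stand-in, not how production systems are usually built (those typically rely on vector search or a search engine). The names `Doc`, `tokenize`, and `retrieve`, as well as the sample knowledge base, are assumptions made up for this illustration.

```typescript
// Toy retriever: ranks documents by how many query words they contain.
// Doc, tokenize, and retrieve are illustrative names, not part of any library.
interface Doc {
  id: string;
  text: string;
}

// Lowercase the text and split it into alphanumeric words.
function tokenize(text: string): string[] {
  return text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

// Return up to k documents that share at least one word with the query,
// ordered from highest to lowest overlap.
function retrieve(query: string, docs: Doc[], k = 2): Doc[] {
  const queryWords = tokenize(query);
  return docs
    .map((doc) => {
      const words = new Set(tokenize(doc.text));
      const score = queryWords.filter((w) => words.has(w)).length;
      return { doc, score };
    })
    .filter((r) => r.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((r) => r.doc);
}

// Hypothetical knowledge base used only for this example.
const knowledgeBase: Doc[] = [
  { id: "a", text: "The billing API issues invoices on the first day of each month." },
  { id: "b", text: "Passwords must be rotated every 90 days." },
  { id: "c", text: "You can download invoices as PDF from the billing dashboard." },
];

const hits = retrieve("How do I download invoices?", knowledgeBase);
```

Here `hits` contains the two billing documents, with the one that mentions both "download" and "invoices" ranked first. The password document shares no words with the query and is dropped. Swapping the scoring function for embedding similarity would leave the rest of the structure unchanged.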

Adding External Knowledge

With a retrieval system in place, we need to pass knowledge from this system to the model. A RAG pipeline typically follows these steps:

- Receive an input query.

- Use the retrieval system to search for relevant information based on the query.

- Incorporate the retrieved information into the prompt sent to the LLM.

- Generate a response that leverages the retrieved context.
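The four steps above can be sketched as a single function that is independent of any particular retriever or model. This is only an outline under stated assumptions: the `Retriever` and `Generator` types, the `answerWithRag` function, and the prompt wording are hypothetical and chosen for illustration. Any search backend and any model client that fit these shapes could be plugged in.

```typescript
// Hypothetical interfaces: any search backend and any model client can fit them.
type Retriever = (query: string) => Promise<string[]>;
type Generator = (prompt: string) => Promise<string>;

async function answerWithRag(
  query: string, // Step 1: receive an input query
  retrieve: Retriever,
  generate: Generator,
): Promise<string> {
  // Step 2: search the knowledge base for passages relevant to the query
  const passages = await retrieve(query);

  // Step 3: incorporate the retrieved passages into the prompt,
  // numbering them so the model can tell them apart
  const context = passages.map((p, i) => `[${i + 1}] ${p}`).join("\n");
  const prompt =
    `Answer the question using only the context below.\n\n` +
    `Context:\n${context}\n\n` +
    `Question: ${query}`;

  // Step 4: generate a response that uses the retrieved context
  return generate(prompt);
}
```

Passing the retriever and generator in as arguments keeps the pipeline easy to test: a stub generator that simply returns its prompt shows exactly what the model would receive.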

As an example, here's a simple RAG workflow that passes information from a retriever to a chat model:

```typescript
import { streamText } from "ai";
import { createOpenAI } from "@ai-sdk/openai";

const openai = createOpenAI({
  apiKey: process.env["OPENAI_API_KEY"], // This is the default and can be omitted
});

const model = openai("gpt-4o");

// Build a system prompt that tells the model how to use the retrieved context
const buildSystemPrompt = (context: string) =>
  `You are an assistant for question-answering tasks.
Use the following pieces of retrieved context to answer the question.
If you don't know the answer, just say that you don't know.
Use three sentences maximum and keep the answer concise.
Context: ${context}`;

// Define a question
const question =
  "What are the main components of an LLM-powered autonomous agent system?";

// Placeholder retriever (not part of the AI SDK): replace the body with a
// search over your own knowledge base that returns the matching passages.
async function retrieve(query: string): Promise<string[]> {
  return [];
}

async function main() {
  // Retrieve passages for the question and join them into one context string
  const docs = await retrieve(question);
  const context = docs.join("\n\n");

  const result = await streamText({
    model,
    messages: [
      { role: "system", content: buildSystemPrompt(context) },
      { role: "user", content: question },
    ],
    temperature: 1,
  });

  // Generate a response, printing text chunks as they arrive
  for await (const chunk of result.textStream) {
    process.stdout.write(chunk);
  }
}

main();
```